MCP: Anthropic’s Secret Weapon for Building Superpowered LLM Agents

Arnab das

7 min read · Jun 9, 2025

Introduction: The Missing Link in AI Development

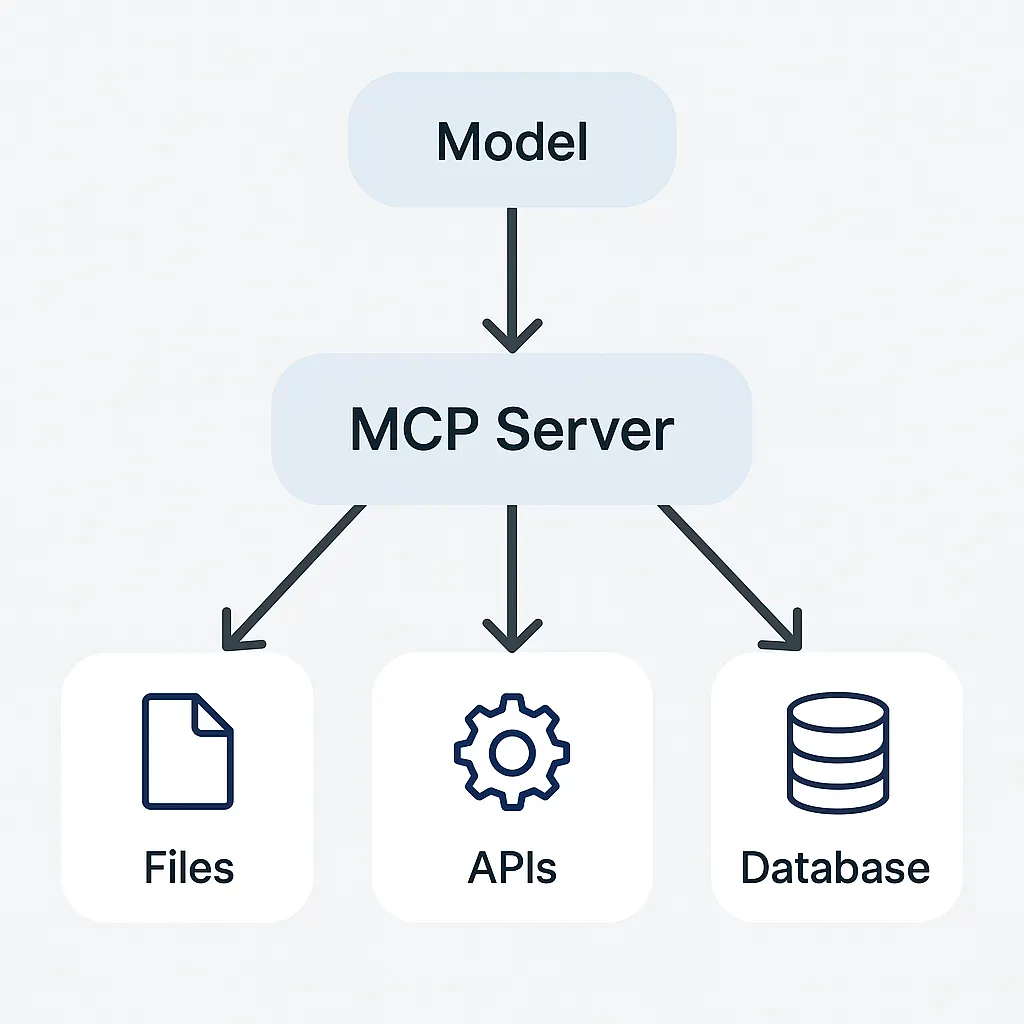

Imagine building an AI assistant that doesn’t just chat, but acts — one that can access your local files, call external APIs, query databases, and integrate with your favourite tools — all through a single, standardised protocol. What used to take months of custom integration, patchwork scripts, and sleepless nights now takes minutes.

Enter the Model Context Protocol (MCP) from Anthropic: an open protocol that turns your LLM from a passive conversationalist into an agent that can act on live data and tools. MCP breaks down integration silos, reduces vendor lock-in, and paves the way for secure, flexible, and scalable AI interactions. In this blog, we’ll pop the hood on MCP: how it works, why it matters, and how it’s reshaping the future of intelligent software.

How Anthropic Changed the Game

Before MCP, developers faced a fragmented landscape where each LLM provider required different integration approaches. You’d build custom connectors for Claude, different ones for GPT, and entirely separate solutions for other models. This created:

- Vendor Lock-in: Switching LLM providers meant rebuilding integrations

- Security Nightmares: Each custom integration introduced new vulnerabilities

- Development Overhead: Teams spent more time on plumbing than on innovation

- Inconsistent Experiences: Different capabilities across different platforms

Anthropic recognised this friction and designed MCP as an open standard that any LLM provider can adopt. Think of it as the “USB protocol” for AI integrations — one standard interface that works everywhere.

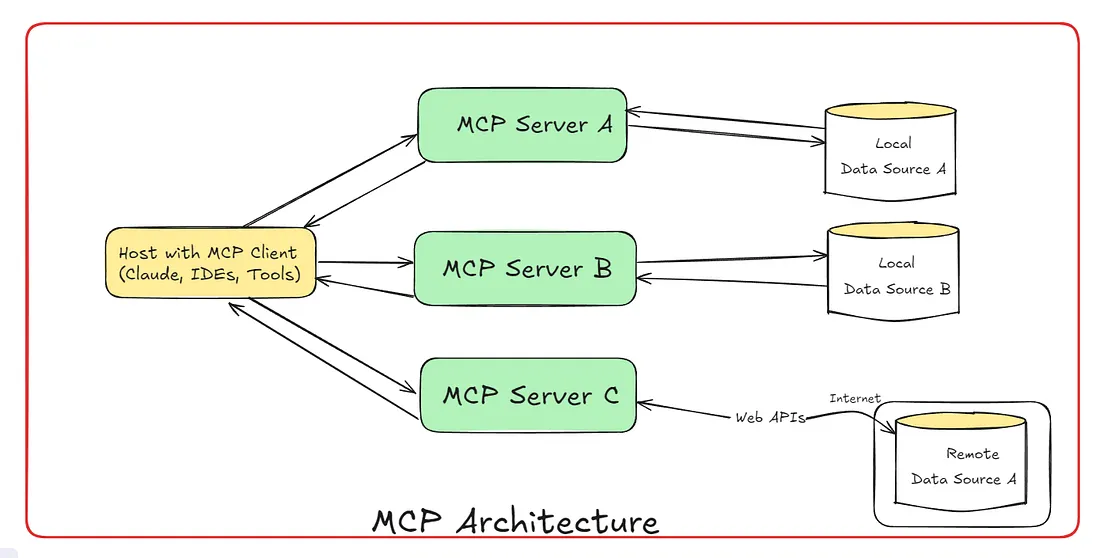

The Architecture: Understanding MCP’s Foundation

MCP follows a client-server architecture in which one host connects to multiple specialized servers, each handling different data sources and capabilities.

At its core, MCP creates a hub-and-spoke model where:

- One Host (like Claude Desktop or your IDE) acts as the central coordination point

- Multiple MCP Servers each specialize in specific domains or data sources

- Local and Remote Data Sources are accessed securely through their respective servers

- The Internet boundary is clearly defined, with remote services accessed via dedicated servers
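The hub-and-spoke idea above can be sketched in a few lines of Go. This is a toy model only: the `Host` and `Server` types, the `Connect` and `Call` methods, and the server and tool names are all hypothetical and are not part of any MCP SDK. It shows one host holding a separate connection per server and routing each call to the server that owns the tool.

```go
package main

import (
	"errors"
	"fmt"
)

// Server is a stand-in for an MCP server: a named set of tools.
// Hypothetical type for illustration; not part of any MCP SDK.
type Server struct {
	Name  string
	Tools map[string]func(arg string) string
}

// Host is the hub: it keeps one entry (one "connection") per server.
type Host struct {
	servers map[string]*Server
}

// NewHost returns a host with no servers connected yet.
func NewHost() *Host {
	return &Host{servers: make(map[string]*Server)}
}

// Connect registers a server under its name, modelling a dedicated connection.
func (h *Host) Connect(s *Server) {
	h.servers[s.Name] = s
}

// ErrNotFound is returned when the server or tool is unknown to the host.
var ErrNotFound = errors.New("not found")

// Call routes a tool call to the named server and runs the named tool.
func (h *Host) Call(server, tool, arg string) (string, error) {
	s, ok := h.servers[server]
	if !ok {
		return "", fmt.Errorf("server %q: %w", server, ErrNotFound)
	}
	fn, ok := s.Tools[tool]
	if !ok {
		return "", fmt.Errorf("tool %q on server %q: %w", tool, server, ErrNotFound)
	}
	return fn(arg), nil
}

func main() {
	host := NewHost()

	// One server for local files, one for a database: each has its own scope.
	host.Connect(&Server{Name: "files", Tools: map[string]func(string) string{
		"read": func(path string) string { return "contents of " + path },
	}})
	host.Connect(&Server{Name: "db", Tools: map[string]func(string) string{
		"query": func(sql string) string { return "rows for: " + sql },
	}})

	out, err := host.Call("files", "read", "notes.txt")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(out)
}
```

Because each server is reached only through its own entry in the host, a failure or a permission boundary in one spoke does not affect the others.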

Key Components Explained:

- Host with MCP Client: the central application that users interact with directly.

  - Claude Desktop: Anthropic’s flagship implementation with built-in MCP support

  - IDEs: Code editors like Cursor and Windsurf, integrating MCP capabilities

  - AI Tools: Custom applications and workflow automation tools

  - Built-in MCP Client: Handles all protocol communications and server connections

- MCP Servers (A, B, C): specialized, lightweight programs that each focus on a specific domain.

  - MCP Server A: Could handle file system operations and local documents

  - MCP Server B: Might manage database connections and queries

  - MCP Server C: Could integrate with web APIs and remote services

  - Each server maintains a 1:1 connection with a dedicated MCP client inside the host

  - Servers are lightweight and easily deployable; each connection is a stateful session that begins with capability negotiation

- Data Sources: the actual repositories of information that servers access.

  - Local Data Sources: Files, databases, and services on your computer

  - Remote Data Sources: External systems accessible over the internet

  - Secure Access: Each server only accesses its designated data sources

  - Clear Boundaries: Local vs. remote access is explicitly managed
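On the wire, the client and server exchange JSON-RPC 2.0 messages, and a tool invocation uses the `tools/call` method. A minimal sketch of what the client side sends is below. The Go types `Request` and `ToolCallParams` and the helper `NewToolCall` are names invented for this illustration, and the `location` value is made up. A real client would also perform the initialization handshake first.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Request is a JSON-RPC 2.0 request envelope.
// Hypothetical type for illustration; an SDK would provide its own.
type Request struct {
	JSONRPC string      `json:"jsonrpc"`
	ID      int         `json:"id"`
	Method  string      `json:"method"`
	Params  interface{} `json:"params,omitempty"`
}

// ToolCallParams carries the tool name and its arguments for tools/call.
type ToolCallParams struct {
	Name      string                 `json:"name"`
	Arguments map[string]interface{} `json:"arguments,omitempty"`
}

// NewToolCall encodes a tools/call request for the given tool and arguments.
func NewToolCall(id int, tool string, args map[string]interface{}) ([]byte, error) {
	return json.Marshal(Request{
		JSONRPC: "2.0",
		ID:      id,
		Method:  "tools/call",
		Params:  ToolCallParams{Name: tool, Arguments: args},
	})
}

func main() {
	msg, err := NewToolCall(1, "get_weather", map[string]interface{}{"location": "Paris"})
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	// Prints the request as a single JSON object on one line.
	fmt.Println(string(msg))
}
```

The server answers with a JSON-RPC response carrying the same `id`, which is how the client matches results to requests.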

Why Developers Are Obsessed

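- Build once, run anywhere: The same MCP server works with any MCP-compatible host, whichever model (Claude, GPT, Llama) sits behind it.

- Security-first: Credentials and raw data stay behind your MCP server; only the results a tool returns are passed to the model.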

- Extend in minutes: Add a new tool? Just write a tiny MCP server.

- Cursor/Windsurf synergy: Build IDE agents that understand your codebase.

Getting Started: Your First MCP Integration

Step 1: Choose Your Host

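```shell
# Install Claude Desktop (includes MCP support).
# The package name below is as given in the original article; confirm it with
# `brew search claude`, or download the installer from Anthropic's website.
brew install claude-desktop

# Or integrate with Cursor/Windsurf
# Download from their respective websites
```

Step 2: Set Up a Server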

```go
package main

import (
	"context"
	"encoding/json"
	"fmt"
	"log"

	// NOTE: these import paths and the server API used below are reproduced
	// from the original listing and should be treated as illustrative.
	// Verify them against the Go MCP SDK you actually use.
	"github.com/anthropic/mcp-go/pkg/mcp"
	"github.com/anthropic/mcp-go/pkg/types"
)

// MyServer embeds the SDK server and adds resource and tool handlers.
type MyServer struct {
	*mcp.Server
}

// ListResources advertises the read-only resources this server exposes.
func (s *MyServer) ListResources(ctx context.Context) ([]*types.Resource, error) {
	return []*types.Resource{
		{
			URI:         "config://settings",
			Name:        "App Settings",
			Description: "Application configuration",
			MimeType:    "application/json",
		},
	}, nil
}

// ListTools advertises the tools the model may call, with a JSON Schema
// describing each tool's input.
func (s *MyServer) ListTools(ctx context.Context) ([]*types.Tool, error) {
	return []*types.Tool{
		{
			Name:        "get_weather",
			Description: "Get current weather information",
			InputSchema: map[string]interface{}{
				"type": "object",
				"properties": map[string]interface{}{
					"location": map[string]interface{}{
						"type":        "string",
						"description": "City name or coordinates",
					},
				},
				"required": []string{"location"},
			},
		},
	}, nil
}

// CallTool dispatches a tool invocation by name.
func (s *MyServer) CallTool(ctx context.Context, name string, args map[string]interface{}) (*types.ToolResult, error) {
	switch name {
	case "get_weather":
		// Check the type assertion so a missing or malformed argument
		// produces an error instead of a panic.
		location, ok := args["location"].(string)
		if !ok || location == "" {
			return nil, fmt.Errorf("get_weather: \"location\" must be a non-empty string")
		}

		// Simulate weather API call
		weather := map[string]interface{}{
			"location":    location,
			"temperature": "22°C",
			"condition":   "Sunny",
			"humidity":    "65%",
		}
		result, err := json.Marshal(weather)
		if err != nil {
			return nil, fmt.Errorf("encoding weather result: %w", err)
		}

		return &types.ToolResult{
			Content: []types.Content{
				{
					Type: "text",
					Text: string(result),
				},
			},
		}, nil
	default:
		return nil, fmt.Errorf("unknown tool: %s", name)
	}
}

func main() {
	server := &MyServer{
		Server: mcp.NewServer("weather-server", "1.0.0"),
	}

	// Register handlers
	server.SetResourceHandler(server.ListResources)
	server.SetToolHandler(server.ListTools, server.CallTool)

	// Start server
	log.Println("Starting MCP server...")
	if err := server.Serve(); err != nil {
		log.Fatal(err)
	}
}
```

Step 3: Configure Connection

For Go-based MCP servers, first build your Go server:

```shell
# Build the Go server
go build -o weather-server main.go

# Or with specific build flags for production
go build -ldflags="-s -w" -o weather-server main.go

# For cross-platform builds
GOOS=linux GOARCH=amd64 go build -o weather-server-linux main.go
GOOS=windows GOARCH=amd64 go build -o weather-server.exe main.go
```

Configuration Examples

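Basic Go Server Configuration (for Claude Desktop this goes in `claude_desktop_config.json`; hosts differ in which keys they honour, so treat `cwd` and `${...}` substitution as host-dependent and prefer an absolute path for `command` if in doubt):

```json
{
  "mcpServers": {
    "weather-server": {
      "command": "./weather-server",
      "args": ["--port", "8080", "--debug"],
      "cwd": "/usr/local/bin/mcp-servers",
      "env": {
        "GO_ENV": "production",
        "API_KEY": "${WEATHER_API_KEY}"
      }
    }
  }
}
```

Go Server with Command Line Arguments

Your Go server can accept configuration via CLI: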

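```go
package main

import (
	"flag"
	"log"

	// NOTE: import path and server API reproduced from the original listing;
	// treat them as illustrative and verify against the SDK you use.
	"github.com/anthropic/mcp-go/pkg/mcp"
)

func main() {
	var (
		port       = flag.Int("port", 8080, "Server port")
		debug      = flag.Bool("debug", false, "Enable debug mode")
		configFile = flag.String("config", "", "Configuration file path")
		rootDir    = flag.String("root-dir", ".", "Root directory for file operations")
	)
	flag.Parse()

	if *debug {
		// The standard library logger has no log levels, so debug mode
		// adds file and line information to each log entry instead.
		log.SetFlags(log.LstdFlags | log.Lshortfile)
	}

	server := mcp.NewServer("go-mcp-server", "1.0.0")

	// Use the flags to configure your server
	log.Printf("Starting server on port %d", *port)
	log.Printf("Root directory: %s", *rootDir)
	if *configFile != "" {
		log.Printf("Config file: %s", *configFile)
	}

	if err := server.Serve(); err != nil {
		log.Fatal(err)
	}
}
```

Environment-Based Configuration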
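The idea behind environment-based configuration is that placeholders such as `${DB_HOST}` are filled in from the process environment when the file is loaded. As a minimal sketch, assuming a hypothetical helper `expandConfig` and made-up default values, the standard library's `os.Expand` can do the substitution while falling back to a default when a variable is unset. Plain `os.ExpandEnv` substitutes an empty string in that case, which can silently produce an invalid config.

```go
package main

import (
	"fmt"
	"os"
)

// expandConfig replaces ${VAR} placeholders in text with values from the
// environment. When a variable is not set, the value from defaults is used.
// Hypothetical helper for illustration.
func expandConfig(text string, defaults map[string]string) string {
	return os.Expand(text, func(key string) string {
		if v, ok := os.LookupEnv(key); ok {
			return v
		}
		return defaults[key]
	})
}

func main() {
	text := "host: ${DB_HOST}\nport: ${DB_PORT}\n"
	defaults := map[string]string{"DB_HOST": "localhost", "DB_PORT": "5432"}

	// The output depends on whether DB_HOST and DB_PORT are set in the
	// environment; unset variables fall back to the defaults above.
	fmt.Print(expandConfig(text, defaults))
}
```

Keeping secrets such as passwords out of the defaults map, and failing loudly when they are missing, is usually the safer choice.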

config.yaml for Go server:

```yaml
server:
  name: "production-server"
  version: "1.0.0"
  port: 8080

database:
  host: ${DB_HOST}
  port: ${DB_PORT}
  name: ${DB_NAME}
  user: ${DB_USER}
  password: ${DB_PASSWORD}

features:
  enable_caching: true
  max_connections: 100
  timeout: "30s"
```

Loading config in Go:

```go
package main

import (
	"os"

	"gopkg.in/yaml.v2"
)

// Config mirrors the server and database sections of config.yaml.
type Config struct {
	Server struct {
		Name    string `yaml:"name"`
		Version string `yaml:"version"`
		Port    int    `yaml:"port"`
	} `yaml:"server"`
	Database struct {
		Host     string `yaml:"host"`
		Port     int    `yaml:"port"`
		Name     string `yaml:"name"`
		User     string `yaml:"user"`
		Password string `yaml:"password"`
	} `yaml:"database"`
}

// loadConfig reads the YAML file, substitutes ${VAR} placeholders from the
// environment, and decodes the result into a Config.
func loadConfig(filename string) (*Config, error) {
	data, err := os.ReadFile(filename)
	if err != nil {
		return nil, err
	}

	// Expand environment variables. Unset variables become empty strings,
	// so validate required fields after loading.
	data = []byte(os.ExpandEnv(string(data)))

	var config Config
	if err := yaml.Unmarshal(data, &config); err != nil {
		return nil, err
	}

	return &config, nil
}
```

Step 4: Advanced Go Server with Database Integration

```go
package main

import (
	"context"
	"database/sql"
	"encoding/json"
	"fmt"
	"log"

	_ "github.com/lib/pq" // PostgreSQL driver

	// NOTE: import paths and server API reproduced from the original listing;
	// treat them as illustrative and verify against the SDK you use.
	"github.com/anthropic/mcp-go/pkg/mcp"
	"github.com/anthropic/mcp-go/pkg/types"
)

type DatabaseServer struct {
	*mcp.Server
	db *sql.DB
}

func NewDatabaseServer() *DatabaseServer {
	// Initialize database connection. The connection string is hard-coded
	// for brevity; load it from configuration in real deployments.
	db, err := sql.Open("postgres", "postgres://user:pass@localhost/mydb?sslmode=disable")
	if err != nil {
		log.Fatal(err)
	}

	return &DatabaseServer{
		Server: mcp.NewServer("database-server", "1.0.0"),
		db:     db,
	}
}

// textResult wraps plain text in a successful tool result.
func textResult(text string) *types.ToolResult {
	return &types.ToolResult{
		Content: []types.Content{{Type: "text", Text: text}},
	}
}

// errorResult reports a tool-level failure back to the model.
func errorResult(format string, a ...interface{}) *types.ToolResult {
	res := textResult(fmt.Sprintf(format, a...))
	res.IsError = true
	return res
}

// jsonResult encodes v as indented JSON text.
func jsonResult(v interface{}) *types.ToolResult {
	out, err := json.MarshalIndent(v, "", "  ")
	if err != nil {
		return errorResult("Encoding error: %v", err)
	}
	return textResult(string(out))
}

func (s *DatabaseServer) ListTools(ctx context.Context) ([]*types.Tool, error) {
	return []*types.Tool{
		{
			Name:        "execute_query",
			Description: "Execute SQL query on database",
			InputSchema: map[string]interface{}{
				"type": "object",
				"properties": map[string]interface{}{
					"query": map[string]interface{}{
						"type":        "string",
						"description": "SQL query to execute",
					},
					"params": map[string]interface{}{
						"type":        "array",
						"description": "Query parameters",
						"items":       map[string]interface{}{"type": "string"},
					},
				},
				"required": []string{"query"},
			},
		},
		{
			Name:        "get_table_schema",
			Description: "Get schema information for a table",
			InputSchema: map[string]interface{}{
				"type": "object",
				"properties": map[string]interface{}{
					"table_name": map[string]interface{}{
						"type":        "string",
						"description": "Name of the table",
					},
				},
				"required": []string{"table_name"},
			},
		},
	}, nil
}

func (s *DatabaseServer) CallTool(ctx context.Context, name string, args map[string]interface{}) (*types.ToolResult, error) {
	switch name {
	case "execute_query":
		query, ok := args["query"].(string)
		if !ok {
			return errorResult("Missing or non-string \"query\" argument"), nil
		}
		// Pass optional positional parameters ($1, $2, ...) to the driver.
		params, _ := args["params"].([]interface{})

		// WARNING: this runs arbitrary SQL chosen by the model. Use a
		// read-only, least-privilege database role in real deployments.
		rows, err := s.db.QueryContext(ctx, query, params...)
		if err != nil {
			return errorResult("Query error: %v", err), nil
		}
		defer rows.Close()

		// Process results
		columns, err := rows.Columns()
		if err != nil {
			return errorResult("Query error: %v", err), nil
		}
		results := []map[string]interface{}{}
		for rows.Next() {
			values := make([]interface{}, len(columns))
			valuePtrs := make([]interface{}, len(columns))
			for i := range columns {
				valuePtrs[i] = &values[i]
			}
			if err := rows.Scan(valuePtrs...); err != nil {
				return errorResult("Scan error: %v", err), nil
			}

			row := make(map[string]interface{})
			for i, col := range columns {
				// Drivers often return text columns as []byte, which
				// encoding/json would otherwise emit as base64.
				if b, ok := values[i].([]byte); ok {
					row[col] = string(b)
				} else {
					row[col] = values[i]
				}
			}
			results = append(results, row)
		}
		if err := rows.Err(); err != nil {
			return errorResult("Query error: %v", err), nil
		}
		return jsonResult(results), nil

	case "get_table_schema":
		tableName, ok := args["table_name"].(string)
		if !ok {
			return errorResult("Missing or non-string \"table_name\" argument"), nil
		}
		query := `
			SELECT column_name, data_type, is_nullable, column_default
			FROM information_schema.columns
			WHERE table_name = $1
			ORDER BY ordinal_position
		`
		rows, err := s.db.QueryContext(ctx, query, tableName)
		if err != nil {
			return errorResult("Schema query error: %v", err), nil
		}
		defer rows.Close()

		schema := []map[string]interface{}{}
		for rows.Next() {
			var colName, dataType, nullable, defaultVal sql.NullString
			if err := rows.Scan(&colName, &dataType, &nullable, &defaultVal); err != nil {
				return errorResult("Schema scan error: %v", err), nil
			}
			schema = append(schema, map[string]interface{}{
				"column_name":    colName.String,
				"data_type":      dataType.String,
				"is_nullable":    nullable.String,
				"column_default": defaultVal.String,
			})
		}
		if err := rows.Err(); err != nil {
			return errorResult("Schema query error: %v", err), nil
		}
		return jsonResult(schema), nil

	default:
		return nil, fmt.Errorf("unknown tool: %s", name)
	}
}

func main() {
	server := NewDatabaseServer()
	defer server.db.Close()

	// Register handlers
	server.SetToolHandler(server.ListTools, server.CallTool)

	log.Println("Starting Database MCP server...")
	if err := server.Serve(); err != nil {
		log.Fatal(err)
	}
}
```

The Future of AI Integration

MCP represents more than a protocol — it’s the foundation for a new era of AI applications. As the ecosystem grows, we can expect:

Industry Standardization

Major LLM providers, including OpenAI and Google DeepMind, have announced support for MCP as an integration protocol. This means:

- Unified development experience across different AI platforms

- Reduced fragmentation in the AI tooling ecosystem

- Easier migration between AI providers

Enterprise Integration

Deep integration with business systems will become seamless:

- ERP Systems: Direct integration with SAP, Oracle, and Microsoft Dynamics

- CRM Platforms: Native Salesforce, HubSpot, and Pipedrive connections

- Data Warehouses: Direct access to Snowflake, BigQuery, and Redshift

- Security Compliance: Built-in audit trails and permission management

Advanced Workflows

Multi-agent systems and complex workflows built on MCP foundations:

- Agent Orchestration: Multiple AI agents working together through MCP

- Workflow Automation: Complex business processes with AI decision points

- Real-time Collaboration: Live data sharing between different AI systems

- Edge Computing: Lightweight MCP servers running on IoT devices

Conclusion: The Protocol That Changes Everything

The Model Context Protocol isn’t just solving today’s integration problems — it’s enabling tomorrow’s AI possibilities. By providing a standardized, secure, and flexible way for LLMs to interact with the world, MCP is democratizing AI development and unleashing creative potential.

Whether you’re building the next breakthrough AI application or simply want your LLM to access your local files, MCP provides the foundation you need. The future of AI integration is here, and it’s more accessible than ever.