Diving Deep into Docker: Beyond the Basics

Arnab das

7 min read · Aug 22, 2023

In today’s rapidly evolving tech landscape, developers are constantly seeking tools that enhance flexibility, consistency, and scalability in application development. Enter Docker — a tool that has emerged as a game-changer for software professionals around the world.

At its core, Docker is a platform that allows developers to automate the deployment of applications inside lightweight, portable containers.

What is a container?

A container is a sandboxed process running on a host machine that is isolated from all other processes running on that host machine. That isolation leverages kernel namespaces and cgroups, features that have been in Linux for a long time. Docker makes these capabilities approachable and easy to use. To summarize, a container:

- Is a runnable instance of an image. You can create, start, stop, move, or delete a container using the Docker API or CLI.

- Can be run on local machines, virtual machines, or deployed to the cloud.

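- Is portable: it can run on any machine with a compatible container runtime. (Linux containers need a Linux kernel; on macOS and Windows, Docker Desktop runs them inside a lightweight Linux VM.)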

- Is isolated from other containers and runs its own software, binaries, configurations, etc.
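On Linux you can inspect these building blocks directly. Each namespace a process belongs to appears as a symlink under /proc/<pid>/ns, and its cgroup membership is listed in /proc/<pid>/cgroup. The Python sketch below assumes a Linux host, and the helpers `namespaces` and `cgroups` are our own names. It prints both for the current process. If you run it on the host and then inside a container, several namespace identifiers (such as pid, net, and mnt) should differ, because Docker starts the container's process in new namespaces.

```python
import os
from pathlib import Path


def namespaces(pid="self"):
    """Map each namespace type (pid, net, mnt, ...) of a process to its kernel identifier."""
    ns_dir = Path(f"/proc/{pid}/ns")
    if not ns_dir.is_dir():
        return {}  # not Linux, or /proc is not mounted
    result = {}
    for entry in sorted(ns_dir.iterdir()):
        try:
            # Each entry is a symlink whose target looks like "net:[4026531840]"
            result[entry.name] = os.readlink(entry)
        except OSError:
            # Inspecting another user's process usually needs extra privileges
            continue
    return result


def cgroups(pid="self"):
    """Return the lines of /proc/<pid>/cgroup, which name the cgroups the process is in."""
    path = Path(f"/proc/{pid}/cgroup")
    return path.read_text().splitlines() if path.exists() else []


if __name__ == "__main__":
    for kind, ident in namespaces().items():
        print(f"{kind:20} {ident}")
    print("\n".join(cgroups()))
```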

What is an image?

A running container uses an isolated filesystem. This isolated filesystem is provided by an image, and the image must contain everything needed to run an application — all dependencies, configurations, scripts, binaries, etc. The image also contains other configurations for the container, such as environment variables, a default command to run, and other metadata.
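You can see this metadata for any local image with `docker image inspect`, which prints a JSON array with one entry per image. The Python sketch below extracts the environment variables, entrypoint, default command, and exposed ports from the `Config` section of that output. `image_metadata` and `inspect_image` are hypothetical helper names, and `inspect_image` assumes the Docker CLI is installed and on your PATH.

```python
import json
import subprocess


def image_metadata(inspect_output: str) -> dict:
    """Summarize the runtime defaults stored in `docker image inspect` JSON output."""
    image = json.loads(inspect_output)[0]  # inspect prints a JSON array
    config = image.get("Config") or {}
    return {
        "env": config.get("Env") or [],
        "entrypoint": config.get("Entrypoint") or [],  # often null in the JSON
        "cmd": config.get("Cmd") or [],
        "exposed_ports": sorted((config.get("ExposedPorts") or {}).keys()),
    }


def inspect_image(name: str) -> dict:
    """Run the Docker CLI (assumed installed) and summarize one local image."""
    result = subprocess.run(
        ["docker", "image", "inspect", name],
        capture_output=True, text=True, check=True,
    )
    return image_metadata(result.stdout)


if __name__ == "__main__":
    print(inspect_image("nginx"))
```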

Why use Docker?

Docker can help you ship code faster and gives you more control over your applications. Containerized applications are easier to deploy and scale, easier to roll back, and easier to troubleshoot. Because containers use the host's resources more efficiently than full virtual machines, Docker can also reduce infrastructure costs. Docker-based applications can be moved from local development machines to production deployments with little or no change. Common use cases include microservices, data processing, continuous integration and delivery (CI/CD), and Containers as a Service (CaaS).

Docker Architecture

Docker uses a client-server architecture. The Docker client talks to the Docker daemon, which does the heavy lifting of building, running, and distributing your Docker containers.

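When you run a command such as `docker run`, the client sends it to the Docker daemon (`dockerd`), which carries it out. The `docker` command uses the Docker API, a REST API that the daemon exposes over a Unix socket or a network interface. The Docker client can communicate with more than one daemon.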
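To see that the client really just talks to an API, here is a minimal Python sketch that does by hand what `docker ps` does. It sends `GET /containers/json` to the daemon over its Unix socket. It uses only the standard library and assumes a local daemon listening on the default Linux socket, /var/run/docker.sock. Accessing that socket normally requires root or membership in the docker group. `UnixHTTPConnection` and `docker_get` are our own names, not part of any Docker SDK.

```python
import http.client
import json
import socket

DOCKER_SOCKET = "/var/run/docker.sock"  # assumption: default socket of a local daemon


class UnixHTTPConnection(http.client.HTTPConnection):
    """An HTTPConnection that connects to a Unix domain socket instead of TCP."""

    def __init__(self, socket_path, timeout=5.0):
        super().__init__("localhost", timeout=timeout)  # host is only used for the Host header
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def docker_get(path, socket_path=DOCKER_SOCKET):
    """Send a GET request to the Docker Engine API and decode the JSON response."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        body = response.read()
        if response.status != 200:
            raise RuntimeError(f"{path}: HTTP {response.status}: {body!r}")
        return json.loads(body)
    finally:
        conn.close()


if __name__ == "__main__":
    # /containers/json lists running containers, like `docker ps`
    for c in docker_get("/containers/json"):
        print(c["Id"][:12], c["Image"], c["Status"])
```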

Docker registries

A Docker registry stores Docker images. Docker Hub is a public registry that anyone can use, and Docker looks for images on Docker Hub by default. You can even run your own private registry.

When you use the docker pull or docker run commands, Docker pulls the required images from your configured registry. When you use the docker push command, Docker pushes your image to your configured registry.
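When you pass a short name such as `nginx` to `docker pull`, Docker expands it to a fully qualified reference. If no registry host is given, it uses Docker Hub (`docker.io`). Official images live under the `library/` namespace, and a missing tag means `latest`. The Python sketch below is a simplified version of that expansion. `normalize_reference` is a hypothetical helper, not part of Docker, and it skips validation and edge cases.

```python
DEFAULT_REGISTRY = "docker.io"


def normalize_reference(ref: str) -> str:
    """Expand a short image reference, e.g. "nginx" -> "docker.io/library/nginx:latest".

    Simplified sketch: no validation, and Docker Hub aliases such as
    index.docker.io are not handled.
    """
    # Split off an optional content digest ("name@sha256:...")
    name, _, digest = ref.partition("@")

    # The first path component is a registry host only if it looks like one
    first, slash, rest = name.partition("/")
    if slash and ("." in first or ":" in first or first == "localhost"):
        registry, path = first, rest
    else:
        registry, path = DEFAULT_REGISTRY, name

    # A tag follows the last ":" in the final path component
    tag = ""
    colon = path.rfind(":")
    if colon > path.rfind("/"):
        path, tag = path[:colon], path[colon + 1:]

    # Official images on Docker Hub live under the "library/" namespace
    if registry == DEFAULT_REGISTRY and "/" not in path:
        path = "library/" + path

    result = f"{registry}/{path}"
    if tag:
        result += f":{tag}"
    if digest:
        result += f"@{digest}"
    if not tag and not digest:
        result += ":latest"  # no tag and no digest means "latest"
    return result
```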

Images

An image is a read-only template with instructions for creating a Docker container. Often, an image is based on another image, with some additional customization. For example, you may build an image which is based on the ubuntu image, but installs the Apache web server and your application, as well as the configuration details needed to make your application run.
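Under the hood, each customization step adds a read-only layer on top of the base image, and a container sees the layers merged into one filesystem. The toy Python model below is a hypothetical simplification. Each layer is a dict of file paths, and `None` marks a deletion, whereas real images record deletions as special "whiteout" files. It mirrors the Ubuntu + Apache + application example above.

```python
from typing import Dict, List, Optional

# Hypothetical model: a layer maps file paths to contents; None means
# "this layer deletes the file" (real images use whiteout files instead).
Layer = Dict[str, Optional[str]]


def flatten(layers: List[Layer]) -> Dict[str, str]:
    """Return the filesystem a container would see, applying layers bottom to top."""
    merged: Dict[str, str] = {}
    for layer in layers:
        for path, content in layer.items():
            if content is None:
                merged.pop(path, None)  # a deletion hides the file from lower layers
            else:
                merged[path] = content  # upper layers override lower ones
    return merged


ubuntu = {"/etc/os-release": "Ubuntu", "/usr/share/doc/readme": "docs"}
apache = {"/etc/apache2/apache2.conf": "default config", "/usr/share/doc/readme": None}
app = {"/var/www/html/index.html": "<h1>Hello</h1>", "/etc/apache2/apache2.conf": "custom config"}

image = flatten([ubuntu, apache, app])
```

One consequence of layering is that deleting a file in a later layer hides it without removing it from the earlier layer, so the image does not get smaller. This is one reason the multistage builds described later are useful.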

You might create your own images or you might only use those created by others and published in a registry. To build your own image, you create a Dockerfile with a simple syntax for defining the steps needed to create the image and run it.

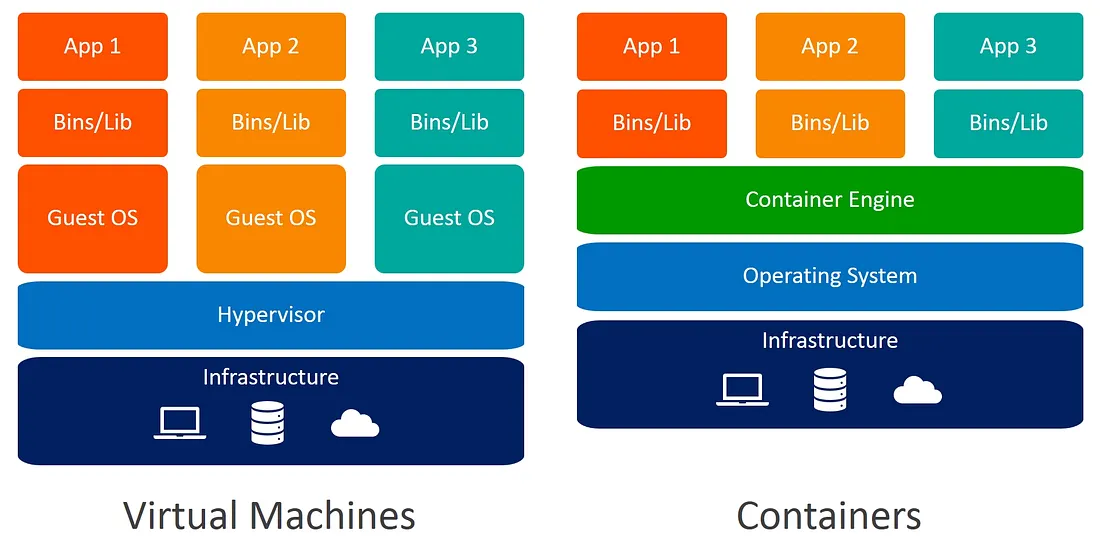

Containerization vs Virtualization

When we talk about deploying applications on Virtual Machines (VMs), we're dealing with a layered approach. At the base, you have the physical hardware, and on top of it, there's the host operating system. The next layer is where things get interesting: the hypervisor. The hypervisor sits between the host and one or more guest operating systems, allowing for the creation of multiple isolated virtual environments, known as VMs.

Here’s the thing with VMs: each VM thinks it’s running on its own physical machine. So, every VM includes not just the application and its dependencies, but also a full-blown guest operating system. This means there’s quite a bit of overhead!

Enter containers. Containers strip away that overhead by virtualizing at the operating-system level instead of the hardware level. All containers on a host share the host's kernel, so an application in a container bundles only its own code, libraries, and dependencies (often on top of a minimal userland from its base image) rather than a complete guest OS. This makes containers lightweight and portable. They package the application in a consistent environment that can be moved and deployed across different stages of development, as long as the host provides a compatible kernel and container runtime.

Dockerfile

Now it’s time to talk about the most important thing: how you create your own Dockerfile.

A Dockerfile describes the steps to create a Docker image. It's like a recipe, listing all the ingredients and steps needed to make your dish. Docker builds an image from this file. The image can be stored in a registry such as Docker Hub and pulled to create containers in any environment. When you run an image, you get a container, which holds the application together with all of its dependencies.

Start by creating a simple index.html file:

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="UTF-8">
  <meta name="viewport" content="width=device-width, initial-scale=1.0">
  <title>Document</title>
  <style>
    /* Let the page fill the viewport so the message can be centered */
    html, body {
      height: 100%;
      margin: 0;
    }

    #arnab {
      height: 100%;
      display: flex;
      justify-content: center;
      align-items: center;
    }
  </style>
</head>
<body>
  <div id="arnab">
    Hello everyone, you are all welcome to Understand &amp; Learn Docker
  </div>
</body>
</html>
```

Next, create a file named `Dockerfile` (no extension) in the same directory. By default, `docker build` looks for a file with exactly this name in the build context.

You can also create it from a Linux terminal:

```bash
touch Dockerfile
vi Dockerfile
```

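In vi, press `i` (or the Insert key) to enter insert mode, paste the code below, then press Esc and type `:wq` to save and quit:

```dockerfile
# Use the official Nginx image as a base
FROM nginx

# Copy our custom HTML file to the Nginx HTML directory
COPY index.html /usr/share/nginx/html

# Document that the container listens on port 80 (publishing happens with docker run -p)
EXPOSE 80
```

Now build an image from the Dockerfile. The `-t` flag tags the image as `dockertutorial:latest`, and the final `.` uses the current directory as the build context:

```bash
docker build -t dockertutorial:latest .
```

Then run a container from it. `-d` runs the container in the background, and `-p 8080:80` maps port 8080 on your machine to port 80 inside the container:

```bash
docker run -d -p 8080:80 dockertutorial:latest
```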

Open http://localhost:8080 in your browser and you should see your page, served by Nginx from inside the container 🎉.
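If you would rather check from a script (for example, in a CI job), the Python sketch below fetches the published port and looks for the page's text. It assumes the container from the previous step is running with `-p 8080:80`. `page_contains` is a hypothetical helper, and it deliberately bypasses any configured HTTP proxy because the target is localhost.

```python
import urllib.request

# An opener with an empty ProxyHandler ignores http_proxy settings, so requests
# to localhost go straight to the published container port.
_DIRECT = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def page_contains(url: str, expected: str, timeout: float = 5.0) -> bool:
    """Fetch url and report whether the response body contains expected.

    Raises urllib.error.URLError if nothing is listening (e.g. the container is not running).
    """
    with _DIRECT.open(url, timeout=timeout) as response:
        body = response.read().decode("utf-8", errors="replace")
    return expected in body


if __name__ == "__main__":
    # Port 8080 matches the -p 8080:80 mapping used with docker run
    print(page_contains("http://localhost:8080", "Learn Docker"))
```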


To see the running container you can use

docker psto see the container id, image, size, port No. etc. -

Also you can use

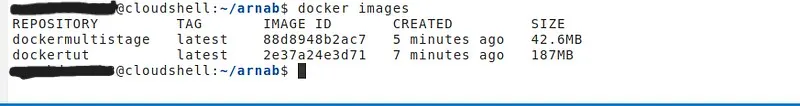

docker imagescommand to see the list of images.

Docker Multistage Builds

Multistage builds in Docker are a powerful way to minimize the size of the final image by using intermediate containers for tasks like compilation or fetching dependencies, and then transferring only the necessary artifacts to the final image.

For the Nginx "Hello World" example, the size savings might not be as significant as they would be for a complex application, but I'll demonstrate the principle regardless.

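Here's an example using a multistage build. Update the Dockerfile:

```dockerfile
# First stage: start from Nginx on Alpine and stage the HTML file
FROM nginx:alpine AS base
COPY index.html /tmp/

# Final stage: use a fresh Nginx Alpine image
FROM nginx:alpine

# Copy only the HTML file from the first stage
COPY --from=base /tmp/index.html /usr/share/nginx/html/index.html

EXPOSE 80
```

Then build and run it the same way. If the first container is still using port 8080, either stop it (find its ID with `docker ps`, then run `docker stop <container-id>`) or publish the new container on a different host port, as here:

```bash
docker build -t dockermultistage:latest .
docker run -d -p 8081:80 dockermultistage:latest
```

The page is now available at http://localhost:8081. Compare the two images with `docker images`: dockermultistage should be noticeably smaller than dockertutorial.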

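The resulting image is smaller, but most of that reduction comes from switching the base image from `nginx` (Debian-based) to `nginx:alpine`. With a single HTML file, the multistage structure itself saves very little. In a real application, the payoff is that compilers, package caches, and other build-time tools stay in the earlier stages and never reach the final image. Smaller images are faster to push, pull, and deploy, which helps in production.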

Security Best Practices for Dockerfiles

- Choose a base image from a trusted source, and keep it small

- Use multistage builds so build tools don't end up in the final image

- Rebuild images regularly so they pick up security fixes from updated base images

- Scan your images for vulnerabilities, for example with Docker Scout

- Refer to the official Docker documentation for more security guidance
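Part of choosing the right base image is knowing exactly which one you get. A tag-less line such as `FROM nginx` means `nginx:latest`, and that tag moves as new versions are published. As a small illustration, here is a hypothetical Python check (not an official Docker tool) that flags base images with no tag or digest, or with the `latest` tag. It ignores line continuations and `ARG` substitution, so treat it as a sketch rather than a real linter.

```python
def unpinned_base_images(dockerfile_text: str) -> list:
    """List FROM images that have no explicit tag or digest, or that use the 'latest' tag."""
    stages = set()  # stage names declared with "FROM ... AS <name>"
    findings = []
    for raw_line in dockerfile_text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        words = line.split()
        if words[0].upper() != "FROM":
            continue
        args = [w for w in words[1:] if not w.startswith("--")]  # drop flags like --platform=...
        if not args:
            continue
        image = args[0]
        # "FROM scratch" and references to earlier build stages are not registry images
        if image != "scratch" and image.lower() not in stages:
            name, _, digest = image.partition("@")
            last_part = name.rsplit("/", 1)[-1]  # a registry port is not a tag
            tag = last_part.split(":", 1)[1] if ":" in last_part else None
            if not digest and (tag is None or tag == "latest"):
                findings.append(image)
        if len(args) >= 3 and args[1].upper() == "AS":
            stages.add(args[2].lower())
    return findings
```

Run against the first Dockerfile in this article, it flags `nginx`; the multistage version, which names `nginx:alpine` explicitly, passes.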

In Conclusion

Docker has revolutionized the way we think about software development and deployment. By introducing lightweight, portable, and consistent containerized environments, it bridges the gap between development, testing, and production, helping to make "it works on my machine" a phrase of the past. As you venture deeper into the world of containerization, remember: Docker isn't just a tool; it's a paradigm shift in how we handle software lifecycles. Embrace the container wave, and enjoy a smoother, more efficient development journey!